Relative measures of effects can be misleading

Posted on 16th February 2018 by Gareth Grant

This is the twenty-fifth blog in a series of 36 blogs based on a list of ‘Key Concepts’ developed by an Informed Health Choices project team. Each blog will explain one Key Concept that we need to understand to be able to assess treatment claims.

We live in the age of big data. You can get statistics on almost anything you want from just a quick Google search, but can you trust them? We have far more information available to us than any of our ancestors did. However, great care must be taken to ensure we interpret that data correctly.


Statistical claims in the media

This is especially relevant in the medical world and can be seen all around us, for instance in newspapers, where it seems anything can cause some form of cancer. The Daily Mail claims that things such as deodorant, soup, sun cream and even oral sex can all increase the risk of certain cancers to some extent. [1] These may be genuine risk factors with trusted scientific evidence behind them, but we need to ask ‘to what extent?’ before telling people to stop doing, eating or using all these things. It is vital to fact-check rather than take everything our friends share on Facebook as gospel.

If you were to read “women who use talcum powder every day to keep fresh are 40% more likely to develop ovarian cancer, according to alarming research” [2] you may be so frightened that you never touch the stuff again. However, this statistic alone doesn’t tell us much. From it, we do not know:

- How many people use talcum powder?

- How many people were surveyed?

- How many women had ovarian cancer in each group (talc users vs. non-talc users)?

- What other factors may be at play. In this example, the research found an increased risk only in postmenopausal women, and only when talcum powder was used directly on the pubic area. There were also more obese women in the ‘talc’ group than in the ‘no talc’ group, which is really important because obesity is a known risk factor for ovarian cancer. [3]

The problem is that this statistic (40% more likely) is reported as a relative risk. A relative risk describes the risk in one group only in relation to the risk in a comparison group; it does not tell us how many people were affected in the first place, that is, how common the outcome is to begin with. This point is illustrated by Dr Jodie Moffat of Cancer Research UK: “It’s important to remember that very few women who use talcum powder will ever develop ovarian cancer.” [2]


Relative or absolute risk?

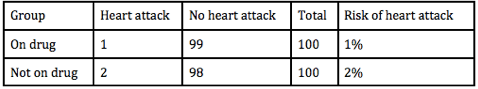

There are many different ways to measure risk. Relative risk, sometimes called the risk ratio, is good for showing the difference between two groups, but it can be misleading because it says nothing about how many cases there were to begin with (the baseline risk). For example, if you had:

- 100 people on a drug and 1 had a heart attack = 1% risk of heart attack

- 100 people not on the drug and 2 had a heart attack = 2% risk of heart attack

Then you might think: a difference of 1 person out of 100 isn’t very much.

However, relative risk is worked out by comparing the 1% and 2% directly, which lets us say that “people not on this drug are twice as likely to have a heart attack” or that “this drug reduces your chances of having a heart attack by 50%”. This may be true, and it could be replicated in other trials. However, the drug may not be as effective as it sounds: with only 1 and 2 heart attacks in the two groups, the difference could simply be due to chance. For this reason, the fact that there was only a 1 percentage point difference between the groups should also be stated. We call this the absolute risk difference.
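The arithmetic above can be written out as a short Python sketch. The function `compare_risks` and its argument names are illustrative only, not part of any statistics package; it simply computes each measure from the number of events and people in the two groups.

```python
def compare_risks(events_treated: int, n_treated: int,
                  events_control: int, n_control: int) -> dict:
    """Relative and absolute measures of effect from raw event counts."""
    risk_treated = events_treated / n_treated
    risk_control = events_control / n_control
    risk_ratio = risk_treated / risk_control
    return {
        "risk_treated": risk_treated,
        "risk_control": risk_control,
        # Relative measures: both ignore how common heart attacks were to begin with.
        "risk_ratio": risk_ratio,
        "times_as_likely_without_drug": risk_control / risk_treated,
        "relative_risk_reduction": 1 - risk_ratio,
        # Absolute measure: the actual gap between the two groups.
        "absolute_risk_difference": risk_control - risk_treated,
    }


# Heart attack example from the text: 1 of 100 on the drug, 2 of 100 not on it.
m = compare_risks(1, 100, 2, 100)
print(f"Risk ratio: {m['risk_ratio']:.2f}")                                  # 0.50
print(f"Times as likely without drug: {m['times_as_likely_without_drug']:.1f}")  # 2.0
print(f"Relative risk reduction: {m['relative_risk_reduction']:.0%}")        # 50%
print(f"Absolute risk difference: {m['absolute_risk_difference']:.0%}")      # 1%
```

The same counts produce a headline-friendly “50%” and a much more modest “1 percentage point”, which is why both should be reported.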

Number needed to treat (NNT)

Another useful measurement we use in the medical world is Number Needed to Treat (NNT). This is a measure of how many people need to receive a drug for 1 person to benefit from it.

In the example above, the NNT would be 100: the absolute risk difference is 1 in 100, so we need to give the drug to 100 people to prevent 1 person having a heart attack. Drugs may have harmful side effects or be very expensive, so if not many people are going to benefit from a drug, it may not be worth giving it.
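A minimal sketch of the NNT calculation, assuming the usual definition of NNT as the reciprocal of the absolute risk difference, rounded up to a whole number of people (a common convention). The function name `number_needed_to_treat` is hypothetical. Exact fractions are used so that floating-point rounding cannot push the value just past a whole number before it is rounded up.

```python
from fractions import Fraction
from math import ceil


def number_needed_to_treat(events_treated: int, n_treated: int,
                           events_control: int, n_control: int) -> int:
    """NNT = 1 / absolute risk reduction, rounded up to whole people."""
    risk_treated = Fraction(events_treated, n_treated)
    risk_control = Fraction(events_control, n_control)
    absolute_risk_reduction = risk_control - risk_treated
    if absolute_risk_reduction <= 0:
        # No absolute benefit in this sample, so an NNT is not meaningful.
        raise ValueError("treatment shows no absolute benefit; NNT is undefined")
    return ceil(1 / absolute_risk_reduction)


# Heart attack example from the text: 1 of 100 on the drug, 2 of 100 not on it.
print(number_needed_to_treat(1, 100, 2, 100))  # 100
```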

Other considerations?

It’s also important to consider how likely a particular outcome is in the first place. For example, imagine a drug reduces the probability of you getting a certain illness by 50% but the drug also has harms, and your risk of getting the illness in the first place is 2 in 100. The drug would lower that risk to 1 in 100, an absolute reduction of 1 in 100, so receiving the treatment is likely to be worthwhile. If, however, your risk of getting the illness in the first place is 2 in 10,000, the drug lowers it only to 1 in 10,000, an absolute reduction of just 1 in 10,000. In that case, receiving the treatment is unlikely to be worthwhile, even though the relative effect of the drug is the same.
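The effect of the baseline risk can be checked numerically. This sketch assumes, as in the example, that the drug halves the risk (a relative risk reduction of 50%) whatever the starting risk; the helper `absolute_effect` is hypothetical and uses exact fractions for the same reason as in the NNT calculation.

```python
from fractions import Fraction
from math import ceil

# Assumption from the example: the drug halves the risk at any baseline.
RELATIVE_RISK_REDUCTION = Fraction(1, 2)


def absolute_effect(baseline_risk: Fraction,
                    rrr: Fraction = RELATIVE_RISK_REDUCTION) -> tuple:
    """Return (absolute risk reduction, NNT) for a given baseline risk."""
    risk_with_drug = baseline_risk * (1 - rrr)
    absolute_reduction = baseline_risk - risk_with_drug
    nnt = ceil(1 / absolute_reduction)
    return absolute_reduction, nnt


for label, baseline in [("2 in 100", Fraction(2, 100)),
                        ("2 in 10,000", Fraction(2, 10_000))]:
    arr, nnt = absolute_effect(baseline)
    print(f"Baseline {label}: absolute reduction {float(arr):.2%}, NNT {nnt}")
# Baseline 2 in 100: absolute reduction 1.00%, NNT 100
# Baseline 2 in 10,000: absolute reduction 0.01%, NNT 10000
```

The relative effect is identical in both lines, but at the lower baseline a hundred times as many people must take the drug (and risk its harms) for one of them to benefit.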

Relative risk can be used to present increases in risk from diseases or risk factors (like the talcum powder example above) as well as reductions in risk from treatments. In large samples, a risk ratio may be less dramatic, but great care must be taken when analysing smaller studies or studies of rarer illnesses, where a difference of just one or two cases can produce a dramatic-sounding relative risk. This is why it is vital to think carefully about everything you read, in newspapers and scientific journals alike, until you know all the facts, including the absolute numbers. It may not necessarily be fake news, but it can still be misleading.
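To illustrate why small numbers of cases call for caution, here is a sketch of a two-sided Fisher exact test applied to the heart attack figures from earlier (1 of 100 on the drug, 2 of 100 not on it). The function `fisher_exact_two_sided` is written here for illustration; in practice a library routine such as SciPy’s `scipy.stats.fisher_exact` would normally be used. The second table (10 of 1,000 vs 20 of 1,000) is a hypothetical larger trial with the same risk ratio, not data from any study.

```python
from math import comb


def fisher_exact_two_sided(events_1: int, n_1: int,
                           events_2: int, n_2: int) -> float:
    """Two-sided Fisher exact test p-value for a 2x2 table of event counts.

    Treats the total number of events as fixed and adds up the probability of
    every possible split between the groups that is no more likely than the
    split actually observed.
    """
    total_events = events_1 + events_2
    lowest = max(0, total_events - n_2)
    highest = min(total_events, n_1)
    # Each weight counts the ways k of the events could fall in group 1.
    weights = {
        k: comb(n_1, k) * comb(n_2, total_events - k)
        for k in range(lowest, highest + 1)
    }
    observed = weights[events_1]
    # Integer weights compare exactly, so equally likely splits are never
    # dropped because of floating-point noise.
    as_or_less_likely = sum(w for w in weights.values() if w <= observed)
    return as_or_less_likely / comb(n_1 + n_2, total_events)


# Heart attack example from the text.
p_small = fisher_exact_two_sided(1, 100, 2, 100)
# Hypothetical larger trial with the same risk ratio (illustrative numbers only).
p_large = fisher_exact_two_sided(10, 1000, 20, 1000)

print(f"1/100 vs 2/100:     p = {p_small:.2f}")  # p = 1.00
print(f"10/1000 vs 20/1000: p = {p_large:.2f}")
```

With only 3 heart attacks in total, a 1 vs 2 split is exactly what chance alone would often produce, so the “50% reduction” carries essentially no evidence on its own. The same risk ratio based on more events gives a smaller p-value.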
